The Python Package Index (PyPI), the central repository of software for the Python programming language, was among the first open source projects to join the Datadog Open Source Partner Program back in 2017. Python plays a central role in how we build and operate at Datadog, and the language continues to be an essential part of our daily work. By supporting PyPI, we're not only helping to strengthen a critical piece of the Python ecosystem, but also recognizing the immense value the Python community has created for us and countless others.

In this article, we highlight an investigation the PyPI team carried out to reduce the latency of one of their API endpoints. We also sat down with Ee Durbin, Director of Infrastructure at the Python Software Foundation (PSF), to discuss their involvement with the PSF and how they are using Datadog to help manage the PyPI repository and other PSF initiatives.

Investigating an Increase of Latency in a Critical API Endpoint

Earlier this year, the Python Package Index (PyPI) team noticed a gradual increase in latency on calls to the GET /pypi/<project>/<version>/json endpoint. This is a critical API endpoint, used by a variety of actors to retrieve release metadata, including number of downloads, license information, and maintainer metadata.
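For readers unfamiliar with the endpoint, here is a minimal sketch of how a client might call it using only the Python standard library. The helper names `release_json_url` and `fetch_release_metadata` are hypothetical and chosen for illustration; only the URL shape comes from the endpoint described above.

```python
import json
import urllib.request
from urllib.parse import quote

PYPI_BASE_URL = "https://pypi.org"


def release_json_url(project: str, version: str) -> str:
    """Build the URL for GET /pypi/<project>/<version>/json (hypothetical helper)."""
    # Percent-encode both path segments so unusual characters cannot break the path.
    return (
        f"{PYPI_BASE_URL}/pypi/"
        f"{quote(project, safe='')}/{quote(version, safe='')}/json"
    )


def fetch_release_metadata(project: str, version: str, timeout: float = 10.0) -> dict:
    """Fetch and decode the release metadata. This performs a network request."""
    url = release_json_url(project, version)
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)
```

As a usage example, `fetch_release_metadata("requests", "2.31.0")["info"]` would return the metadata dictionary for that release, assuming the release exists and the network is reachable.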

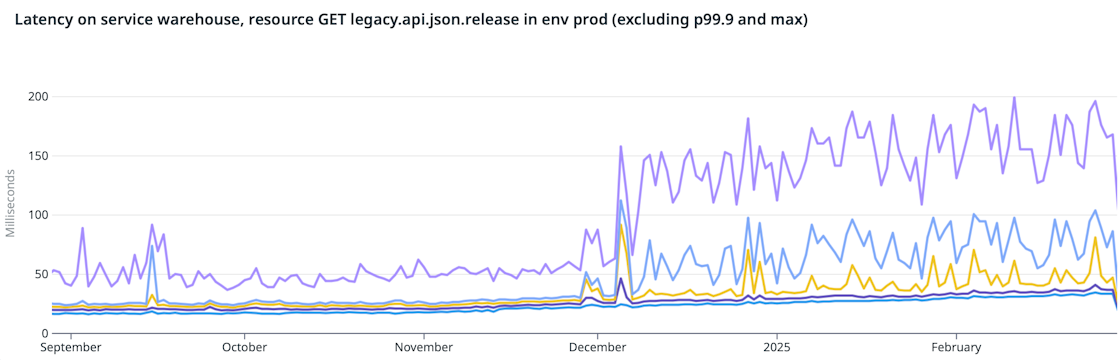

While extreme outliers were filtered out, the majority of requests showed a steady upward trend in response times:

By examining request traces, the team discovered that nearly half of the total request time was being consumed by a single database query. Datadog Database Monitoring revealed the culprit: queries were sequentially scanning the provenance table, which had grown significantly after support for provenance models (PEP 740) was merged in September.

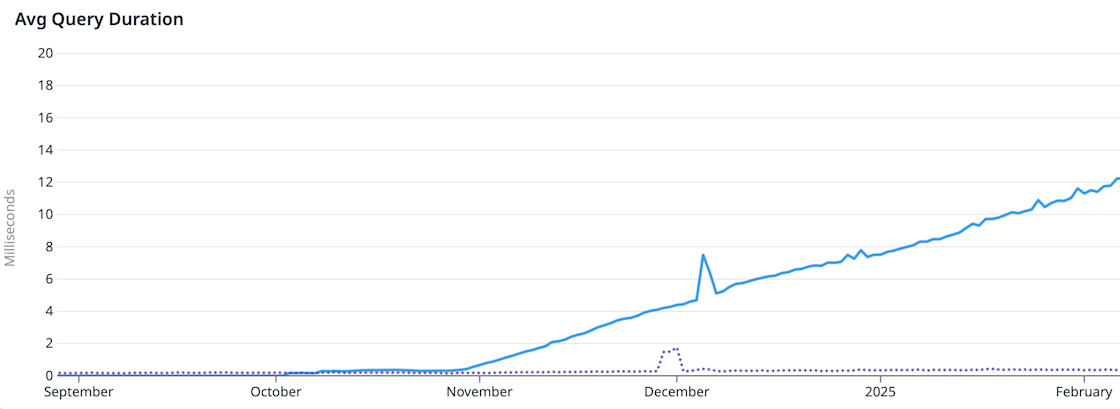

Initially, this table had neither a primary key nor any indexes. This didn't cause major problems at first, because adding digital attestations to uploaded packages wasn't enabled by default. But when the blessed GitHub Action for publishing packages to PyPI (gh-action-pypi-publish v1.11.0) switched to publishing provenance data by default in late October, the table started growing. Because each request required scanning the entire table "from the bottom up," query durations rose steadily with this growth:

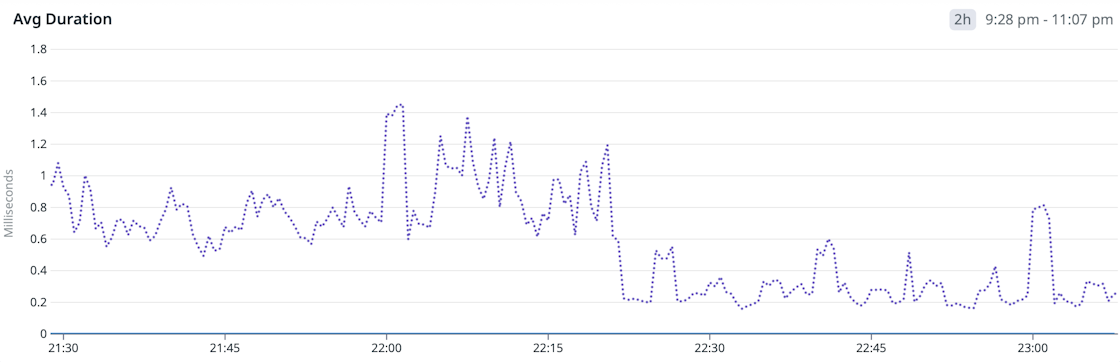

To address the issue, the team introduced an index on the provenance records. The impact was immediate: query durations dropped sharply, and latency stabilized across the board.
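The effect of such an index can be illustrated with a small, self-contained sketch. This is not the PyPI schema or the team's actual migration: SQLite stands in for the production database, and the table layout, the `file_id` column, and the index name are assumptions made for the example. It asks the database for its query plan before and after the index is created.

```python
import sqlite3

# Hypothetical stand-in for the provenance table: no primary key, no indexes.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE provenance (file_id INTEGER, payload TEXT)")
conn.executemany(
    "INSERT INTO provenance VALUES (?, ?)",
    ((i, "{}") for i in range(10_000)),
)

# Lookup by an unindexed column, similar in spirit to the slow query.
QUERY = "SELECT payload FROM provenance WHERE file_id = ?"


def query_plan(connection: sqlite3.Connection, sql: str, params: tuple) -> str:
    """Return SQLite's human-readable plan for a query."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The last of the four columns holds the plan description.
    return " | ".join(row[3] for row in rows)


# Without an index, every lookup has to scan the whole table.
plan_before = query_plan(conn, QUERY, (4242,))

# Adding an index lets the database jump straight to the matching rows.
conn.execute("CREATE INDEX ix_provenance_file_id ON provenance (file_id)")
plan_after = query_plan(conn, QUERY, (4242,))

print("before:", plan_before)
print("after: ", plan_after)
```

The first plan reports a full table scan, while the second reports a search using the new index. A sequential scan costs time proportional to the table size, which is consistent with query durations rising as the table grew.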

This investigation is a great example of how observability tools can empower open source projects to detect performance regressions, trace them back to root causes, and validate fixes with real-time data.

Interview with Ee Durbin, PSF Director of Infrastructure

How long have you been involved with the PSF?

I have been involved with the Python community for the past 14 years. Around half of that time I was a volunteer, contributing to maintenance and modernization across as many projects as I was able, including PyPI. In 2018 I joined the PSF full time as Director of Infrastructure.

When did the PSF apply for the Datadog Open Source Partner Program (OSPP)?

Until we had access to Datadog, we had very little visibility for PyPI. We had basic availability alerts that just gave us information on whether the site was up or down, basic host-level resource metrics, and some rudimentary custom metrics using statsd. But that was it.

This clearly wasn't enough to just run the site, but the real challenge arrived when we started the Warehouse project: a full rewrite of the PyPI backend. As part of the non-functional goals of the project, we wanted to ensure that we didn't have any performance regressions, and measuring that without proper observability was going to be a problem.

When looking for alternatives I found out about Datadog's OSPP and I decided to apply on behalf of PyPI. We were approved and we started onboarding the project. We immediately gained a lot more insight into both the existing PyPI and the new project, helping us build more confidence with the migration.

Back in 2017, Datadog had just two products: infrastructure metrics and APM, so the PSF has seen the platform grow a lot in the past few years. Which of the newer features of the platform are you using?

Indeed! One of the great things about being part of the program is that we get access to the newest features and integrations as they get released, and the team is always interested in trying them out.

We are heavy users of Database Monitoring. Our database performance is critical for PyPI, and being able to understand any long-running queries, lock contention, missing indexes, or other database bottlenecks is super useful.

Aside from Database Monitoring, another relatively new feature that the Python community has been using is CI Visibility. In this case, it is the CPython team that has been using the feature to better understand their test pipelines and how to improve them.

Here for the Long Haul

Datadog's partnership with the Python Software Foundation is a lasting commitment to the health of the ecosystem we rely on. By providing observability tools and ongoing support, we aim to help the PSF sustain the critical infrastructure that enables Python development worldwide.